Why Mesh Optimization is Key for High-Quality 3D Assets

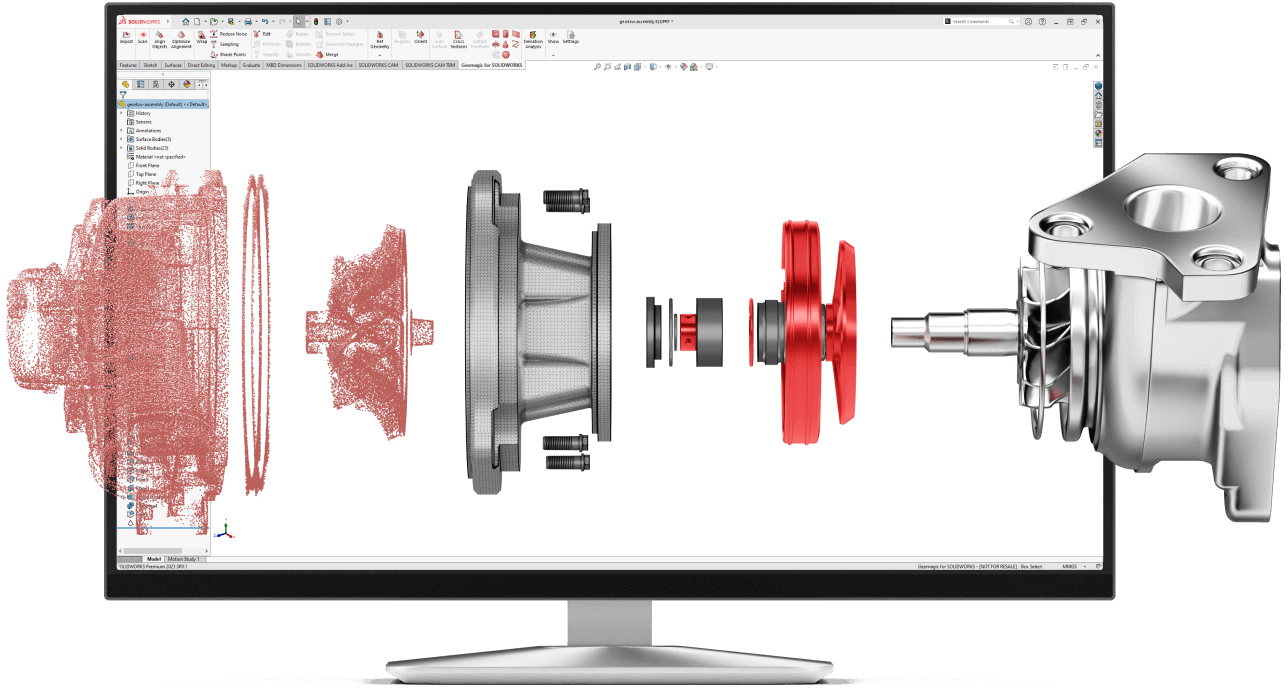

In the rapidly evolving landscape of digital creation, 3D assets have become indispensable across many sectors, from immersive games and augmented reality experiences to detailed product visualizations and digital twins for industrial applications. Demand for high-fidelity, visually compelling, and functionally robust 3D models keeps growing. However, the path from raw capture or initial design to a polished, deployable asset is full of technical complications. Unprocessed 3D scans and CAD exports, while rich in detail, frequently arrive with excessive polygon counts, topological defects, and inefficient data structures. In that state the data is comprehensive but hard to use in practice: it is slow to render and expensive to store.

Historically, 3D models were built by hand, with artists placing every vertex and face. With advanced scanning technologies and procedural generation tools, the volume and complexity of raw 3D data have grown dramatically. These technologies offer unparalleled detail and accuracy, but they also create new problems of data management and optimization. Researchers have long sought ways to simplify complex geometry without losing critical visual information, balancing fidelity against computational cost. Early attempts often degraded quality noticeably, which showed the need for more sophisticated algorithms that reduce polygon counts while preserving a model's essential shape.

The core issue is an inherent trade-off: a higher polygon count generally means more visual detail, but it also demands more processing power and storage. In real-time applications such as video games or interactive AR/VR experiences, unoptimized models create performance bottlenecks, causing frame-rate drops and a poor user experience. Even for offline rendering or archiving, very large files complicate transfer, storage, and long-term management. This trade-off has driven steady innovation in 3D graphics, particularly in mesh optimization techniques that systematically turn raw data into usable assets.
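To put the storage side of this trade-off into numbers, here is a rough back-of-the-envelope sketch in Python. It assumes an uncompressed indexed triangle mesh in which every vertex stores a position, a normal, and one UV pair as 32-bit floats (32 bytes per vertex) and every triangle uses three 32-bit indices. Real formats add or drop attributes, quantize, or compress, so treat the result as an order-of-magnitude estimate; the function name `mesh_memory_bytes` is illustrative.

```python
def mesh_memory_bytes(num_vertices: int, num_triangles: int,
                      floats_per_vertex: int = 8, index_size: int = 4) -> int:
    """Rough size in bytes of an uncompressed indexed triangle mesh.

    Assumed layout (illustrative only): per vertex, position (3) + normal (3)
    + UV (2) = 8 float32 values; per triangle, three integer indices of
    `index_size` bytes each.
    """
    vertex_bytes = num_vertices * floats_per_vertex * 4  # 4 bytes per float32
    index_bytes = num_triangles * 3 * index_size
    return vertex_bytes + index_bytes


# A closed triangle mesh of low genus has roughly twice as many triangles as vertices.
raw = mesh_memory_bytes(1_000_000, 2_000_000)   # dense raw scan
optimized = mesh_memory_bytes(10_000, 20_000)   # decimated asset
print(f"raw: {raw / 1e6:.1f} MB, optimized: {optimized / 1e6:.2f} MB")
```

Under these assumptions the dense mesh needs about 56 MB of geometry versus about 0.56 MB for the decimated one, a 100× difference before any textures are counted.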

Industry practice has consistently treated mesh refinement as a critical step. The goal is not merely to cut the polygon count but to improve the overall utility and visual quality of the asset: making models lighter, cleaner, and more efficient without compromising their integrity. These methods have evolved alongside a better understanding of how geometry affects rendering pipelines, user interaction, and the wider digital ecosystem. The aim is to integrate complex 3D data smoothly into diverse platforms, pairing visual quality with good performance so that content works for a wider audience and a broader range of applications.

Key Insights from the Field 💡

- Unoptimized meshes significantly hurt application performance, causing slow load times and reduced frame rates, which directly detract from user engagement and overall system responsiveness.

- Excessive polygon counts produce large files that complicate storage, distribution, and real-time streaming, increasing the operational overhead of digital asset pipelines.

- Effective mesh optimization is not just about reduction; it's about intelligent simplification that maintains visual integrity and crucial geometric features, ensuring the asset remains true to its original form.
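One common way to tell a simplifier which "crucial geometric features" to protect is to flag edges where the surface bends sharply. The Python sketch below marks an edge as a feature when the angle between the normals of its two adjacent triangles exceeds a threshold. The 30° default is an arbitrary assumption, treating boundary and non-manifold edges as features is a design choice rather than a rule, and the function names are hypothetical.

```python
import math
from collections import defaultdict


def face_normal(vertices, face):
    """Unit normal of a triangle, following its vertex winding order."""
    a, b, c = (vertices[i] for i in face)
    u = [b[k] - a[k] for k in range(3)]
    v = [c[k] - a[k] for k in range(3)]
    n = (u[1] * v[2] - u[2] * v[1],
         u[2] * v[0] - u[0] * v[2],
         u[0] * v[1] - u[1] * v[0])
    length = math.sqrt(sum(x * x for x in n))
    return tuple(x / length for x in n)  # assumes a non-degenerate triangle


def feature_edges(vertices, faces, angle_deg=30.0):
    """Return edges (i, j) with i < j that a simplifier should try to keep.

    Assumes consistently wound triangles; a flipped face would make its
    shared edges look like creases.
    """
    edge_faces = defaultdict(list)  # edge -> indices of adjacent faces
    for fi, face in enumerate(faces):
        for k in range(3):
            edge = tuple(sorted((face[k], face[(k + 1) % 3])))
            edge_faces[edge].append(fi)

    normals = [face_normal(vertices, f) for f in faces]
    cos_limit = math.cos(math.radians(angle_deg))
    features = set()
    for edge, adjacent in edge_faces.items():
        if len(adjacent) != 2:
            features.add(edge)  # boundary or non-manifold edge
            continue
        n0, n1 = normals[adjacent[0]], normals[adjacent[1]]
        # Normals diverging by more than angle_deg indicate a crease
        if sum(p * q for p, q in zip(n0, n1)) < cos_limit:
            features.add(edge)
    return features
```

For two triangles meeting at a right angle, the shared edge is flagged; for two coplanar triangles, only the outer boundary edges are.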

Unpacking the Significance of Refined Geometry 🔍

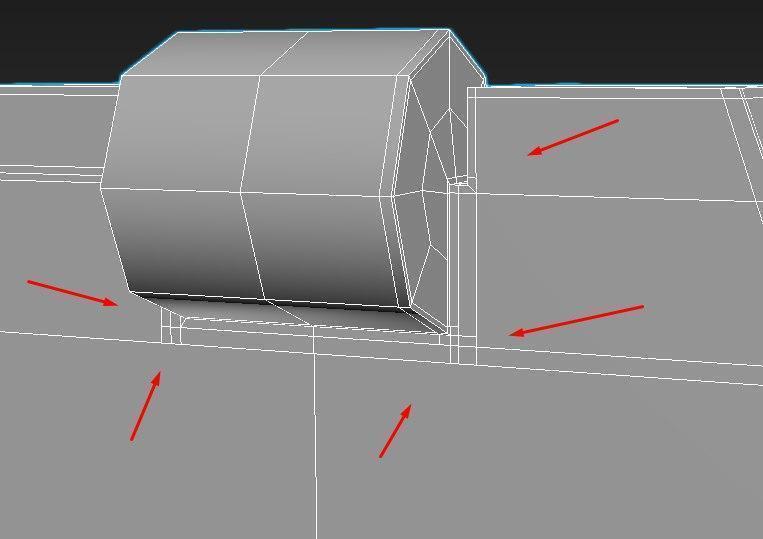

Across optimization strategies, a consistent pattern emerges: carefully reducing geometric complexity improves runtime performance. Decimation, which selectively removes vertices and faces while trying to keep the visual shape, is a fundamental technique. Its effectiveness, however, depends heavily on how well the algorithm identifies and preserves critical features such as sharp edges and fine surface detail. Poorly executed decimation produces models that look blocky or lose their characteristic contours, undermining the purpose of high-fidelity asset creation. Getting this balance right matters most when models will be viewed up close or handled interactively.
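Many production decimators use edge collapses ordered by an error metric such as the quadric error metric. As a much simpler stand-in that still shows the core idea of merging vertices and discarding collapsed faces, here is a sketch of grid-based vertex clustering in Python. It is deliberately not feature-aware, which illustrates the weakness described above: a coarse `cell_size` rounds off sharp edges. The function name and parameters are illustrative.

```python
import math


def cluster_decimate(vertices, faces, cell_size):
    """Simplify a triangle mesh by grid-based vertex clustering.

    vertices: list of (x, y, z) tuples; faces: list of (i, j, k) index triples.
    Every vertex inside the same cube of side `cell_size` is merged into one
    vertex at the cluster's average position. Triangles whose corners end up
    in fewer than three distinct clusters are dropped, as are duplicates.
    """
    cell_to_new = {}       # grid cell -> new vertex index
    sums, counts = [], []  # per-cluster coordinate sums and member counts
    remap = []             # old vertex index -> new vertex index
    for x, y, z in vertices:
        cell = (math.floor(x / cell_size),
                math.floor(y / cell_size),
                math.floor(z / cell_size))
        if cell not in cell_to_new:
            cell_to_new[cell] = len(sums)
            sums.append([0.0, 0.0, 0.0])
            counts.append(0)
        idx = cell_to_new[cell]
        sums[idx][0] += x
        sums[idx][1] += y
        sums[idx][2] += z
        counts[idx] += 1
        remap.append(idx)

    new_vertices = [tuple(s / n for s in total) for total, n in zip(sums, counts)]

    new_faces, seen = [], set()
    for i, j, k in faces:
        a, b, c = remap[i], remap[j], remap[k]
        if a == b or b == c or a == c:
            continue  # triangle collapsed to an edge or a point
        key = frozenset((a, b, c))
        if key in seen:
            continue  # another face already covers these three clusters
        seen.add(key)
        new_faces.append((a, b, c))
    return new_vertices, new_faces
```

Halving `cell_size` roughly quadruples the number of surviving vertices on a surface, so the parameter acts as a coarse quality dial.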

Beyond simple polygon reduction, remeshing takes a more holistic approach: it rebuilds the mesh, typically producing a new, more uniform and cleaner topology. This is particularly useful for models derived from raw scan data, which often have irregular triangulation and noise. A well-remeshed model has a lower polygon count and a more consistent, animation-friendly structure, which matters for applications that require deformation or precise texture mapping. The open question is how far remeshing can be automated before manual intervention is needed to fix subtle inaccuracies or preserve artistic intent.
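"More uniform" can be made measurable. A widely used triangle quality measure is q = 4√3·A / (a² + b² + c²), where A is the area and a, b, c are the edge lengths: it equals 1 for an equilateral triangle and approaches 0 for thin slivers. The Python sketch below reports the minimum and mean of this measure over a mesh, one plausible way to compare a raw scan against its remeshed version. The choice of metric and the function names are assumptions, not something prescribed here.

```python
import math


def triangle_quality(p0, p1, p2):
    """4*sqrt(3)*area / (a^2 + b^2 + c^2): 1.0 for equilateral, near 0 for slivers."""
    def sub(p, q):
        return [p[k] - q[k] for k in range(3)]

    u, v, w = sub(p1, p0), sub(p2, p0), sub(p2, p1)
    # The cross product of two edges has length equal to twice the area
    cross = (u[1] * v[2] - u[2] * v[1],
             u[2] * v[0] - u[0] * v[2],
             u[0] * v[1] - u[1] * v[0])
    area = 0.5 * math.sqrt(sum(c * c for c in cross))
    sq_edges = sum(x * x for x in u) + sum(x * x for x in v) + sum(x * x for x in w)
    if sq_edges == 0:
        return 0.0  # all three corners coincide
    return 4.0 * math.sqrt(3.0) * area / sq_edges


def quality_summary(vertices, faces):
    """(minimum, mean) triangle quality over a mesh; slivers pull the minimum down."""
    scores = [triangle_quality(*(vertices[i] for i in f)) for f in faces]
    return min(scores), sum(scores) / len(scores)
```

Raw scans tend to show a low minimum driven by slivers; a good isotropic remesh should raise both numbers.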

The impact of mesh optimization extends far beyond mere aesthetics; it fundamentally influences the usability and accessibility of 3D content. Consider a large-scale digital twin project or an extensive e-commerce catalog. Without efficient models, rendering these environments or loading product views would be prohibitively slow, hindering user experience and limiting scalability. The challenge lies in developing tools that can automate much of this complex process while still allowing for fine-grained control when precision is paramount. ScanHub Cloud recognizes this critical need, providing solutions that empower creators to achieve this delicate equilibrium without extensive manual labor.

One of the more contentious areas in mesh optimization involves the perception of detail. While a higher polygon count objectively means more geometric information, human perception often doesn't require every single vertex to convey realism. Techniques like normal mapping and displacement mapping allow for the illusion of high detail on a low-polygon mesh, offloading complexity from geometry to textures. The controversy arises when deciding the optimal "sweet spot" – how much geometric detail is truly necessary before these texture-based methods become more efficient and visually convincing. This decision often varies significantly depending on the target platform, viewing distance, and the specific artistic style of the project.
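To make the move from geometry to textures concrete, the sketch below converts a grid of height values (the kind of detail a displacement map carries) into a tangent-space normal map, the texture a low-polygon mesh can sample to fake that detail during shading. It uses central differences and the common convention of mapping each normal component from [-1, 1] to an 8-bit value from 0 to 255. The function name and the `strength` parameter are illustrative assumptions, and real bakers usually project detail from a high-polygon mesh rather than starting from a height grid.

```python
import math


def height_to_normal_map(height, strength=1.0):
    """Convert a 2D height grid into an 8-bit tangent-space normal map.

    height: list of equally long rows of floats (one sample per texel).
    Returns rows of (R, G, B) tuples. Slopes come from central differences,
    falling back to one-sided differences at the borders.
    """
    rows, cols = len(height), len(height[0])
    normal_map = []
    for y in range(rows):
        out_row = []
        for x in range(cols):
            x0, x1 = max(x - 1, 0), min(x + 1, cols - 1)
            y0, y1 = max(y - 1, 0), min(y + 1, rows - 1)
            dx = (height[y][x1] - height[y][x0]) / (x1 - x0) if x1 != x0 else 0.0
            dy = (height[y1][x] - height[y0][x]) / (y1 - y0) if y1 != y0 else 0.0
            # The normal of the surface z = h(x, y) is proportional to (-dh/dx, -dh/dy, 1)
            nx, ny, nz = -dx * strength, -dy * strength, 1.0
            length = math.sqrt(nx * nx + ny * ny + nz * nz)
            # Map each unit component from [-1, 1] to an 8-bit value in [0, 255]
            out_row.append(tuple(int((c / length * 0.5 + 0.5) * 255 + 0.5)
                                 for c in (nx, ny, nz)))
        normal_map.append(out_row)
    return normal_map
```

A flat region encodes as the familiar light blue (128, 128, 255). Engines differ on whether the green channel points up or down in texture space, so the Y component may need flipping for a given target.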

The ongoing evolution of rendering technologies and hardware capabilities continuously reshapes the optimization landscape. What was considered an "optimized" model a few years ago might now be considered inefficient. This dynamic environment necessitates adaptable and intelligent optimization workflows. Tools must be capable of generating multiple levels of detail (LODs) automatically, allowing applications to switch between different versions of a model based on its distance from the camera or its importance in the scene. This adaptive approach is crucial for maintaining consistent performance across diverse hardware configurations and complex virtual environments, ensuring a smooth and immersive experience for all users.
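Distance-based LOD switching can be sketched in a few lines. In the Python example below, each LOD index refers to a progressively simplified version of the same mesh, and the switch distances are hypothetical values in scene units, not recommendations. `bisect_right` counts how many thresholds the camera distance has passed, which is exactly the index of the level to draw.

```python
import math
from bisect import bisect_right

# Hypothetical switch distances in scene units:
# LOD0 closer than 10, LOD1 from 10 to 30, LOD2 from 30 to 80, LOD3 beyond 80.
LOD_SWITCH_DISTANCES = [10.0, 30.0, 80.0]


def select_lod(camera_pos, object_pos, switch_distances=LOD_SWITCH_DISTANCES):
    """Return the LOD index (0 = most detailed) for an object at this distance."""
    distance = math.dist(camera_pos, object_pos)
    # Number of thresholds at or below the distance = index of the LOD to use
    return bisect_right(switch_distances, distance)


for d in (5, 25, 50, 120):
    print(d, select_lod((0, 0, 0), (d, 0, 0)))
```

In practice, many engines switch on an object's projected screen size rather than raw distance, and add some hysteresis so that an object hovering near a threshold does not flicker between levels.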

Ultimately, the objective of mesh optimization is to unlock the full potential of 3D assets. It's about ensuring that these digital creations are not just visually appealing but also performant, versatile, and future-proof. By meticulously refining the underlying geometry, we enable developers and designers to push the boundaries of what's possible in interactive experiences, simulations, and visualizations. The commitment to high-quality, optimized assets is a commitment to superior digital experiences, paving the way for more innovative and engaging applications across all industries. ScanHub Cloud is dedicated to providing the foundational tools for this critical transformation.

Practical Applications and Future Directions 🚀

- Enhanced Performance Across Platforms: Optimized models ensure seamless integration and smooth rendering in real-time applications like AR/VR, gaming, and interactive web experiences, boosting user satisfaction.

- Streamlined Data Management: Reduced file sizes facilitate easier storage, faster transfer, and more efficient handling of large asset libraries, cutting down on infrastructure demands and operational complexities.

- Foundation for Advanced Workflows: Clean, optimized meshes provide a robust base for subsequent processes such as animation, rigging, and texture baking, leading to higher quality final products and more efficient production cycles.
